A web callback URL, also known as a redirect URI in the context of OAuth2, is a specific URL to which a web application or service redirects a user after a particular interaction or process is completed.

This is crucial for enabling communication and data exchange between different web services.

OAuth2 Secured Element Traces Tasked.

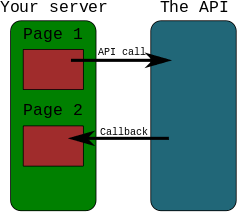

A web callback URL (also known as a redirect URI or postback URL) is a specific internet address provided by one application to an external service, which the external service then calls (sends an HTTP request to) to provide information or a notification once a specific event or asynchronous process has been completed.

How It Works

The core function of a callback URL is to facilitate asynchronous communication between two different systems without requiring the initial application to continuously wait or “poll” the external service for a response.

- Initiation: Application A sends a request to an external service (Application B) to perform a task (e.g., process a payment, authenticate a user, or run a lengthy report). As part of this request, Application A includes a specific callback URL where it wishes to be notified of the outcome.

- Processing: Application B processes the request. This may take a few seconds or several minutes, and the connection with Application A is closed in the meantime.

- Notification: Once Application B has completed the task, it sends an HTTP request (typically a POST or GET request) to the callback URL provided by Application A. This request usually includes a payload of data, such as a transaction status or an authentication token.

- Action: Application A receives the notification at the specified callback URL endpoint and processes the information, triggering subsequent actions such as displaying a success page to a user or updating a database record.
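The four steps above can be sketched end to end on a single machine. This is only an illustration under stated assumptions: the names `start_application_a`, `application_b_run_task`, and the `/callbacks/report` path are hypothetical, and plain HTTP on `127.0.0.1` is used purely so the sketch runs locally (a real callback URL should use HTTPS).

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

received_notifications = []  # what Application A has been told so far


class CallbackHandler(BaseHTTPRequestHandler):
    """Application A's callback endpoint (the 'Action' step)."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        received_notifications.append(json.loads(self.rfile.read(length)))
        self.send_response(200)
        self.end_headers()

    def log_message(self, *args):
        pass  # keep the example quiet


def start_application_a():
    """Start the callback endpoint and return (server, callback_url)."""
    server = HTTPServer(("127.0.0.1", 0), CallbackHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_port}/callbacks/report"


def application_b_run_task(callback_url):
    """Application B: finish the task, then notify the callback URL it was given."""
    result = {"task": "report", "status": "completed"}
    parts = urlsplit(callback_url)
    connection = http.client.HTTPConnection(parts.hostname, parts.port, timeout=5)
    connection.request("POST", parts.path, body=json.dumps(result).encode("utf-8"),
                       headers={"Content-Type": "application/json"})
    status = connection.getresponse().status
    connection.close()
    return status
```

Calling `start_application_a()` and passing the returned URL to `application_b_run_task()` delivers the notification to Application A without Application A ever polling.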

Common Use Cases

Callback URLs are a foundational concept in web integrations and are used in various scenarios:

- OAuth Authentication: After a user successfully authenticates with a third-party service (like Google, Facebook, or Twitter), the service redirects the user’s browser back to the application’s designated callback URL, often including an authorization code or token as a query parameter.

- Payment Gateways: Once a user completes an online payment on a third-party payment processor’s website (e.g., PayPal), the processor sends the user back to the merchant’s website using a callback URL to display an order confirmation or “thank you” page.

- Asynchronous API Operations: For time-consuming API tasks like file processing or bulk SMS sending, an API might accept a callback URL to notify the calling application when the operation is complete, rather than forcing the initial connection to remain open.

- Webhooks: In the context of webhooks, a callback URL is the designated endpoint that receives real-time event notifications from a provider whenever specific events occur (e.g., a new user signs up or a file is translated).


What are some best practices for securing callback URLs?


Securing callback URLs requires a defense-in-depth approach that combines several methods to ensure that requests are legitimate, that their contents remain confidential, and that they arrive unaltered.

Core Security Measures

- Always use HTTPS: Enforce HTTPS for all callback URLs to ensure data is encrypted in transit and protected from man-in-the-middle attacks. Avoid using HTTP, as the connection is unencrypted plain text.

- Implement source verification (HMAC signatures): This is the most crucial step. The sending service should generate a unique cryptographic signature (usually HMAC) for each payload using a shared secret key. Your endpoint must recalculate the signature upon receipt and compare it with the provided signature to verify the request’s authenticity and integrity (i.e., that it hasn’t been tampered with).

- Validate the source via IP whitelisting: If the external service provides a fixed range of IP addresses, configure firewall rules to only accept traffic from those specific IPs. This adds an extra layer of security against unauthorized requests from other sources.

- Add timestamps and prevent replay attacks: Include a timestamp in the signed payload. The receiving application should check if the request is recent (e.g., within a few minutes) and reject outdated requests. This prevents an attacker from recording a legitimate, signed request and “replaying” it later.
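As one illustration of the source-validation measure, an application-level check can complement firewall rules. The sketch below uses Python’s standard `ipaddress` module; the network ranges are documentation-only placeholders (an assumption, not any real provider’s addresses), and `is_allowed_source` is a hypothetical helper name.

```python
import ipaddress

# Hypothetical provider ranges; replace with the ranges your provider publishes.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]


def is_allowed_source(remote_addr, networks=ALLOWED_NETWORKS):
    """Return True if remote_addr falls inside one of the allowed networks."""
    try:
        address = ipaddress.ip_address(remote_addr)
    except ValueError:
        return False  # not a valid IP address at all
    return any(address in network for network in networks)
```

If the application sits behind a proxy or load balancer, the address to check is whatever that trusted layer reports as the original client, which is deployment-specific.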

Additional Best Practices

- Register specific, exact URLs: When configuring callback URLs in the external service’s settings, use exact string matching. Avoid using wildcards (`*`) or allowing arbitrary redirect URLs, as this can create open redirect vulnerabilities that attackers can exploit for phishing or token interception.

- Use a unique `state` parameter (for OAuth): In authorization flows, generate a unique, cryptographically secure `state` parameter for each user session and validate it upon callback. This binds the request to the user’s specific session and defends against Cross-Site Request Forgery (CSRF) attacks.

- Minimize sensitive data in the payload: Webhooks and callbacks are typically for notifications. Avoid sending highly sensitive information like passwords or full PII (Personally Identifiable Information) in the initial callback request. Instead, use an identifier and have your system fetch necessary sensitive data via a separate, authenticated API call.

- Implement robust logging and monitoring: Log all incoming callback requests (metadata only, without sensitive data) to track activity, debug issues, and detect anomalies or potential security incidents.

- Acknowledge events quickly: Your endpoint should respond with a `200 OK` status code as soon as possible after validation, offloading any time-consuming processing to a background job or queue. This prevents the sending service from repeatedly retrying the request due to timeouts.

- Rotate secrets regularly: Periodically rotate the shared secrets or keys used for signature verification to limit the impact of a potential compromise.
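Two of these practices, exact redirect matching and the OAuth state check, fit in a few lines. This is a minimal sketch, assuming a dict-like per-user `session` object and a hypothetical registered URL; the function names are illustrative only.

```python
import hmac
import secrets

# Hypothetical registered callback URL; compared by exact string match, no wildcards.
REGISTERED_REDIRECT_URIS = {"https://app.example.com/oauth/callback"}


def is_registered_redirect(uri):
    """Accept only URIs that exactly match a registered value."""
    return uri in REGISTERED_REDIRECT_URIS


def new_state(session):
    """Create an unguessable state value and remember it for this session."""
    state = secrets.token_urlsafe(32)
    session["oauth_state"] = state
    return state


def state_is_valid(session, returned_state):
    """Check the state from the callback against the stored one (single use)."""
    expected = session.pop("oauth_state", None)
    if expected is None or returned_state is None:
        return False
    return hmac.compare_digest(expected.encode("utf-8"), returned_state.encode("utf-8"))
```

Popping the stored value makes each state usable once, so a callback that is replayed with the same state is rejected.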

HMAC (Hash-based Message Authentication Code) signature generation is a process used to ensure the authenticity and integrity of a message by using a secret key. It provides strong security against tampering because only a party with the shared secret key can generate or verify a valid signature.

The HMAC Generation Process

The generation process involves a few key components and steps:

Components Needed

- A Shared Secret Key: A unique, cryptographically strong key known only to the sender and the receiver. This key should be generated randomly and stored securely (e.g., in environment variables or a secrets manager).

- A Cryptographic Hash Function: A standard hashing algorithm like SHA-256 (most common), SHA-512, or SHA-1 (less recommended due to known vulnerabilities).

- The Message/Payload: The actual data (e.g., the raw body of the HTTP request) being sent.

Step-by-Step Generation

The sending application follows these general steps to generate the signature:

- Prepare the Data: The raw request body (and sometimes other data like specific headers, timestamps, or the HTTP method) is collected in a specific, consistent order to form the “string to sign”. Any change in formatting (like extra spaces or different key ordering in a JSON body) will result in a different signature on the receiving end.

- Apply the HMAC Algorithm: The chosen cryptographic hash function is applied to the prepared data using the shared secret key. Most modern programming languages have built-in libraries (like Python’s `hmac` module or Node.js’s `crypto` module) to perform this operation.

- Encode the Result: The resulting binary hash is typically encoded into a readable format, such as a hexadecimal string or a Base64-encoded string.

- Include in the Request: The final, encoded HMAC signature is included in the HTTP request, usually within a custom HTTP header (e.g., `X-Hub-Signature` or `X-Signature`).
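The encoding step is a common source of mismatches, because the same digest looks different in hex and in Base64. The short sketch below computes one HMAC-SHA256 digest and encodes it both ways; the secret and body are placeholder values chosen for illustration.

```python
import base64
import hashlib
import hmac

secret = b"example-shared-secret"  # hypothetical key, for illustration only
body = b'{"event":"ping"}'         # hypothetical raw request body

digest = hmac.new(secret, body, hashlib.sha256).digest()  # 32 raw bytes

hex_signature = digest.hex()                              # 64 hex characters
b64_signature = base64.b64encode(digest).decode("ascii")  # 44 Base64 characters
```

Both strings represent the same bytes, so the receiver must apply whichever encoding the sender documents before comparing.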

Verification on the Receiving End

When the callback URL receives the request, it performs the exact same process:

- It extracts the received signature from the header.

- It uses the locally stored, shared secret key and the received raw payload to independently calculate its own HMAC signature, using the identical algorithm and steps as the sender.

- It then compares its calculated signature with the signature provided in the request header using a secure comparison method (e.g., `hmac.compare_digest()` in Python to prevent timing attacks).

- If the signatures match, the application can trust that the request originated from the legitimate source and that the data has not been tampered with. If they do not match, the request is rejected.

Give a specific example of how the ‘string to sign’ is created


The ‘string to sign’ (also known as the canonical string or payload to be hashed) is a precise combination of data elements from the request. The exact format is defined by the service sending the webhook and must be strictly followed by the recipient.

The simplest and most common example involves using the entire raw request body as the ‘string to sign’.

Simple Example: Hashing the Request Body

Providers such as Shopify and Dropbox use this straightforward method. (Stripe, by contrast, prepends a timestamp to the body before signing.)

Scenario

- Shared Secret Key: `wh_sec_12345ABCDEF`

- Hash Algorithm: HMAC-SHA256

- Request Method: `POST`

- Request Body (Raw JSON Payload):

```json
{ "event": "invoice.paid", "data": { "invoice_id": "inv_98765", "amount": 100.00 } }
```

Creating the ‘String to Sign’

In this simple example, the entire raw JSON string, exactly as received (no pretty-printing, no extra whitespace), is the ‘string to sign’.

```json
{ "event": "invoice.paid", "data": { "invoice_id": "inv_98765", "amount": 100.00 } }
```

The sending application takes this exact string and computes the HMAC-SHA256 hash using the shared secret key. The result (often Base64 or hex encoded) is sent in an HTTP header, e.g., `X-Shopify-Hmac-Sha256`.
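To see why the raw bytes matter, the sketch below signs the scenario’s body twice: once exactly as received, and once after parsing and re-serializing the JSON. The `sign` helper is a hypothetical name; the key and body come from the scenario above.

```python
import hashlib
import hmac
import json

SECRET = b"wh_sec_12345ABCDEF"  # shared secret from the scenario
raw_body = b'{ "event": "invoice.paid", "data": { "invoice_id": "inv_98765", "amount": 100.00 } }'


def sign(body):
    """HMAC-SHA256 over the given bytes, hex encoded."""
    return hmac.new(SECRET, body, hashlib.sha256).hexdigest()


signature_over_raw = sign(raw_body)

# Parsing and re-serializing keeps the data but changes the bytes
# (spacing differs, and 100.00 becomes 100.0), so the signature changes too.
reserialized = json.dumps(json.loads(raw_body)).encode("utf-8")
signature_over_reserialized = sign(reserialized)
```

The two signatures differ even though both byte strings decode to the same data, which is why a receiver should verify against the body exactly as it arrived and parse it only afterwards.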

Complex Example: Combining Multiple Request Elements

Other providers, such as AWS or Google Cloud, use a more comprehensive approach to prevent a wider range of attacks (like an attacker reusing a valid body with a different URL). They combine several request components into the ‘string to sign’, typically separated by newline characters (\n).

Scenario

- Shared Secret Key: `api_secret_XYZ`

- Hash Algorithm: HMAC-SHA256

- Request Details:

  - HTTP Method: `POST`

  - Host: `api.example.com`

  - Request URI: `/webhooks/callback?user_id=123`

  - Timestamp Header: `X-Api-Timestamp: 1678886400`

  - Request Body: `{"status": "processed"}`

Creating the ‘String to Sign’

The ‘string to sign’ is formed by concatenating these specific elements in a defined order, with newlines between them:

```
POST\n
api.example.com\n
/webhooks/callback?user_id=123\n
x-api-timestamp:1678886400\n
{"status": "processed"}
```

(Note: in this example the header name is lowercased and there is no space after the colon in the combined string. Each `\n` stands for a single newline character.)

This concatenated string is then used with the secret key to generate the HMAC signature. This method ensures that if any part of the request is altered (e.g., the URL parameters or the timestamp), the signature verification will fail.
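A sketch of this multi-element scheme in Python follows. The element order and the lowercased `x-api-timestamp:` prefix follow the example and its note; the function names `build_string_to_sign` and `sign_request` are hypothetical, and a real provider’s specification would dictate the exact canonical form.

```python
import hashlib
import hmac


def build_string_to_sign(method, host, request_uri, timestamp, body):
    """Join the request elements with newlines, in a fixed order."""
    lines = [
        method.upper(),
        host.lower(),
        request_uri,
        f"x-api-timestamp:{timestamp}",  # lowercased name, no space after the colon
        body,
    ]
    return "\n".join(lines)


def sign_request(secret, string_to_sign):
    """HMAC-SHA256 over the canonical string, hex encoded."""
    return hmac.new(secret, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


string_to_sign = build_string_to_sign(
    "POST", "api.example.com", "/webhooks/callback?user_id=123",
    1678886400, '{"status": "processed"}',
)
signature = sign_request(b"api_secret_XYZ", string_to_sign)
```

Because the URI is part of the signed string, replaying the same body against `/webhooks/callback?user_id=456` would produce a different expected signature and fail verification.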

Implementing HMAC signature generation in Python is straightforward using the built-in hmac and hashlib modules.

Here is a full example showing both the sender side (generation) and the receiver side (verification).

Prerequisites

You need a shared secret key. It’s best practice to load this from an environment variable rather than hardcoding it.

```python
# In a real application, load this from an environment variable or a secrets manager
SECRET_KEY = b'your-super-secret-key-that-both-sides-share'
# Note: The key must be a byte string (hence the 'b' prefix)
```

Example: Sender Side (Generation)

This function simulates the external service that generates the webhook and signs the payload before sending it.

```python
import hmac
import hashlib
import json


def generate_hmac_signature(secret_key, payload_bytes):
    """
    Generates an HMAC-SHA256 signature for a given payload using a secret key.
    """
    # The key is applied to the payload using the HMAC algorithm
    h = hmac.new(secret_key, payload_bytes, hashlib.sha256)
    # We get the digest (the signature) and encode it to hex for transmission
    signature = h.hexdigest()
    return signature


# 1. Define the payload
data = {
    "event": "user.created",
    "user_id": "u_abc123",
    "timestamp": 1678886400
}

# 2. Convert the dictionary to a JSON string, then encode it to bytes
# Note: Ensure consistent JSON serialization order if your service requires it
payload_bytes = json.dumps(data, separators=(',', ':')).encode('utf-8')

# 3. Generate the signature
generated_signature = generate_hmac_signature(SECRET_KEY, payload_bytes)

print(f"Payload to sign: {payload_bytes.decode('utf-8')}")
print(f"Generated Signature: {generated_signature}")

# The sender then sends the payload in the body of a POST request,
# and the signature in a header like 'X-Signature'.
# Header example: {'X-Signature': 'f3d4...'}
```

Example: Receiver Side (Verification)

This function simulates your web application’s callback endpoint. It verifies the incoming signature against the data it received.

```python
import hmac
import hashlib


def verify_hmac_signature(secret_key, received_payload_bytes, received_signature):
    """
    Verifies an incoming signature.
    """
    # Recalculate the signature using the received data and our local secret key
    local_hash = hmac.new(secret_key, received_payload_bytes, hashlib.sha256)
    local_signature = local_hash.hexdigest()

    # Securely compare the two signatures
    # hmac.compare_digest prevents timing attacks
    if hmac.compare_digest(local_signature, received_signature):
        print("VERIFICATION SUCCESSFUL: Signatures match.")
        return True
    else:
        print("VERIFICATION FAILED: Signatures do not match.")
        return False


# --- Simulation of receiving a webhook request ---
# We assume we received the following data in a POST request:

# The raw bytes received by our server:
received_payload = b'{"event":"user.created","user_id":"u_abc123","timestamp":1678886400}'

# The signature from the 'X-Signature' HTTP header:
signature_from_header = generated_signature  # Using the one we just generated above

# --- Verification Step ---
is_verified = verify_hmac_signature(SECRET_KEY, received_payload, signature_from_header)

# Example of a failed verification with a tampered payload
tampered_payload = b'{"event":"user.created","user_id":"u_hacker_id","timestamp":1678886400}'
print("\nTesting tampered payload:")
verify_hmac_signature(SECRET_KEY, tampered_payload, signature_from_header)
```
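In a running service the same check sits inside the HTTP endpoint, where it must read the raw request bytes before any JSON parsing. The following is a framework-free sketch written as a plain WSGI application; the `WEBHOOK_SECRET` environment variable, the `callback_app` and `simulate_post` names, and the choice of a 401 response are assumptions made for illustration.

```python
import hashlib
import hmac
import io
import os


def callback_app(environ, start_response):
    """Minimal WSGI callback endpoint that checks X-Signature over the raw body."""
    secret = os.environ.get("WEBHOOK_SECRET", "").encode("utf-8")  # hypothetical variable name
    length = int(environ.get("CONTENT_LENGTH") or 0)
    raw_body = environ["wsgi.input"].read(length)  # the exact bytes that were signed
    received = environ.get("HTTP_X_SIGNATURE", "")
    expected = hmac.new(secret, raw_body, hashlib.sha256).hexdigest()

    # Refuse everything if no secret is configured, then compare in constant time.
    if secret and hmac.compare_digest(expected.encode("utf-8"), received.encode("utf-8")):
        status, message = "200 OK", b"ok"
    else:
        status, message = "401 Unauthorized", b"invalid signature"

    start_response(status, [("Content-Type", "text/plain"),
                            ("Content-Length", str(len(message)))])
    return [message]


def simulate_post(body, signature):
    """Call the app in-process, as a WSGI server would, and return the status line."""
    captured = {}
    environ = {
        "REQUEST_METHOD": "POST",
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
        "HTTP_X_SIGNATURE": signature,
    }
    callback_app(environ, lambda status, headers: captured.update(status=status))
    return captured["status"]
```

`simulate_post` exists only to exercise the endpoint without a network; in production the app would be served by a WSGI server, and any slow processing would be queued after the 200 response is decided.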

Adding a timestamp to the signature generation helps mitigate replay attacks, where an attacker records a valid request and sends it again later.

The approach involves adding the current timestamp to the ‘string to sign’ and including it as a separate HTTP header in the request. The receiver then verifies both the signature and that the timestamp is recent.

Example: Adding a Timestamp in Python

We will modify the previous sender-side example to include the timestamp in the string used for hashing.

1. Modify the generate_hmac_signature function

We must update the function to accept the timestamp as a separate element to be included in the hashed string.

```python
import hmac
import hashlib
import json
import time

SECRET_KEY = b'your-super-secret-key-that-both-sides-share'


def generate_hmac_signature_with_timestamp(secret_key, payload_bytes, timestamp):
    """
    Generates an HMAC-SHA256 signature by combining timestamp and payload.
    """
    # Create the 'string to sign' by concatenating the timestamp and the payload body,
    # often separated by a period or colon (defined by the service provider's spec)
    string_to_sign = f"{timestamp}.".encode('utf-8') + payload_bytes
    print(f"String used for hashing: {string_to_sign.decode('utf-8')}")

    h = hmac.new(secret_key, string_to_sign, hashlib.sha256)
    signature = h.hexdigest()
    return signature, string_to_sign


# 1. Define the payload
data = {
    "event": "invoice.paid",
    "amount": 100.00
}
payload_bytes = json.dumps(data, separators=(',', ':')).encode('utf-8')

# 2. Get the current UNIX timestamp (integer seconds since epoch)
current_timestamp = int(time.time())

# 3. Generate the signature
generated_signature, signed_string = generate_hmac_signature_with_timestamp(
    SECRET_KEY, payload_bytes, current_timestamp
)
print(f"\nGenerated Signature: {generated_signature}")

# The sender sends:
# HTTP Header 'X-Webhook-Timestamp': 1678886400 (example value)
# HTTP Header 'X-Signature': f3d4...
# HTTP Body: {"event":"invoice.paid","amount":100.0}
# (json.dumps serializes the float 100.00 as 100.0)
```

2. Verification on the Receiver Side (Timestamp & Signature Check)

The receiver now has two critical checks:

- Is the signature valid?

- Is the timestamp recent (within an acceptable tolerance, e.g., 5 minutes)?

```python
import hashlib
import hmac
import time


def verify_request_with_timestamp(secret_key, received_payload, received_sig, received_timestamp_str):
    """
    Verifies both the signature and the recency of the timestamp.
    """
    # 1. Check if the timestamp is recent
    try:
        received_timestamp = int(received_timestamp_str)
    except ValueError:
        print("VERIFICATION FAILED: Invalid timestamp format.")
        return False

    # Define an acceptable time tolerance (e.g., 5 minutes or 300 seconds)
    time_tolerance = 300
    if abs(time.time() - received_timestamp) > time_tolerance:
        print("VERIFICATION FAILED: Timestamp is too old or in the future.")
        return False

    # 2. Recalculate the expected signature
    string_to_sign = f"{received_timestamp}.".encode('utf-8') + received_payload
    local_hash = hmac.new(secret_key, string_to_sign, hashlib.sha256)
    local_signature = local_hash.hexdigest()

    # 3. Securely compare signatures
    if hmac.compare_digest(local_signature, received_sig):
        print("VERIFICATION SUCCESSFUL: Signatures match and timestamp is valid.")
        return True
    else:
        print("VERIFICATION FAILED: Signatures do not match.")
        return False


# --- Simulation of a received request ---
# We use the data we generated earlier. The raw bytes must match what the sender
# signed; json.dumps serialized the amount 100.00 as 100.0.
rcv_payload = b'{"event":"invoice.paid","amount":100.0}'
rcv_sig = generated_signature
rcv_timestamp = str(current_timestamp)

# --- Verification Step ---
verify_request_with_timestamp(SECRET_KEY, rcv_payload, rcv_sig, rcv_timestamp)

# Example of a replay attack (a request captured 10 minutes ago and sent again now):
print("\nTesting a replay attack:")
old_timestamp = current_timestamp - 600  # 10 minutes ago
old_sig, _ = generate_hmac_signature_with_timestamp(SECRET_KEY, rcv_payload, old_timestamp)

# The signature is valid for the old timestamp, but the request is rejected as stale
verify_request_with_timestamp(SECRET_KEY, rcv_payload, old_sig, str(old_timestamp))
```

Clock skew occurs when the system clocks of the sender and receiver servers are not perfectly synchronized. This is a common issue in distributed systems, and it can cause legitimate requests to be rejected if the time difference exceeds the acceptable limit.

Here are the primary ways to handle clock skew issues:

1. Implement an Acceptable Time Tolerance (Leeway)

The most common and practical solution is to build a buffer, or “leeway,” into your verification logic. Instead of requiring the incoming timestamp to match your server’s time exactly, you allow a small window of difference.

- How it works: When verifying the timestamp, you check if the absolute difference between your server’s current time and the received timestamp falls within a specific tolerance window.

- Typical Tolerance: A common and recommended tolerance is 5 minutes (300 seconds). This is generally enough to cover typical network latency and minor clock drift, but small enough to still effectively prevent most replay attacks.

- Implementation: The Python verification example provided previously already incorporates this:

```python
time_tolerance = 300  # 5 minutes
if abs(time.time() - received_timestamp) > time_tolerance:
    # Reject the request
    return False
```


2. Ensure Server Time Synchronization (NTP)

While tolerance is necessary for minor skews, the fundamental solution is to ensure all servers involved (both the sender’s and receiver’s infrastructure) are synchronized with a reliable time source using the Network Time Protocol (NTP).

- How it works: NTP is a protocol designed to synchronize computer clocks over a network. By using a public, reliable NTP server (like `pool.ntp.org`), all servers maintain a highly accurate and consistent time reference, minimizing the actual clock skew.

- Benefit: This approach reduces the need for large tolerance windows, allowing you to keep your time tolerance small for better security against replay attacks.

3. Provide Clear Error Messages

If a request is rejected due to a timestamp issue, the API provider should return a specific error code (e.g., HTTP 401 Unauthorized or 403 Forbidden) and, ideally, an informative message indicating a clock skew problem. This helps developers on the sending side quickly identify and fix the issue on their end (e.g., by checking their NTP settings).

4. Client-Side Clock Skew Correction (For SDKs)

For developers building SDKs for external clients, a more advanced strategy is to have the client application automatically detect a clock skew error.

- How it works: If the API server returns an error indicating a timestamp issue, the client SDK can calculate the time offset between its clock and the server’s clock, adjust its internal time calculation, and then automatically retry the request with the correct, synchronized time. This makes the process transparent for the end-user developer.
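One way an SDK might estimate that offset is from the standard HTTP `Date` header on the server’s error response. The sketch below assumes the server sends such a header; `estimate_clock_offset` and `corrected_timestamp` are hypothetical helper names, and network delay is ignored for simplicity.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def estimate_clock_offset(server_date_header, local_now=None):
    """Seconds to add to the local clock so that it matches the server's clock."""
    server_time = parsedate_to_datetime(server_date_header)
    if local_now is None:
        local_now = datetime.now(timezone.utc)
    return (server_time - local_now).total_seconds()


def corrected_timestamp(local_epoch_seconds, offset_seconds):
    """Timestamp to put in the retried request, adjusted by the estimated offset."""
    return int(local_epoch_seconds + offset_seconds)
```

For example, if the server reports `Wed, 15 Mar 2023 13:20:00 GMT` while the local clock reads 13:18:00 UTC, the estimated offset is 120 seconds, and the retry would use the local epoch time plus 120.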

While HMAC signatures are the most widely recommended and used method for webhook security, several other robust alternatives exist, ranging from simpler shared secrets to more complex certificate-based authentication.

1. Asymmetric Key Signatures (Digital Signatures)

Instead of a single shared secret key (symmetric), this method uses a private key (known only to the sender) to sign the request and a public key (provided to the receiver) to verify it.

- Pros: This provides non-repudiation, meaning only the actual provider could have generated the signature. The receiver can verify the message but cannot forge one themselves, which is useful in multi-party scenarios. The public key can also be distributed openly without the risk of a full compromise.

- Cons: It is generally more computationally expensive than HMAC and requires a system for managing public keys.

- Used by: PayPal and SendGrid use variations of this approach.


2. OAuth 2.0 and JSON Web Tokens (JWTs)

This approach leverages established authorization frameworks. The webhook provider first authenticates with an OAuth authorization server to get a time-limited access token (a JWT), which is then included in the webhook request’s Authorization header.

- Pros: It uses industry-standard protocols, the tokens have built-in expiry times, and validation can be stateless.

- Cons: It adds complexity, requiring an Identity Provider (IdP) infrastructure. There is also no single standard implementation across providers, leading to inconsistency.

- Used by: Wix, Janrain (Akamai), Plaid, and SendGrid use or provide options for JWT/OAuth security.
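To make the token structure and the built-in expiry concrete, here is a standard-library sketch that creates and checks an HS256-signed JWT. It rests on loud assumptions: real providers often sign with asymmetric algorithms such as RS256, a maintained JWT library is normally the better choice, and `make_hs256_token` and `verify_hs256_token` are hypothetical names used only for this illustration.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw):
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(segment):
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_hs256_token(claims, key):
    """Build header.payload.signature, signed with HMAC-SHA256."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode("utf-8"))
    payload = _b64url_encode(json.dumps(claims).encode("utf-8"))
    signing_input = f"{header}.{payload}".encode("ascii")
    signature = _b64url_encode(hmac.new(key, signing_input, hashlib.sha256).digest())
    return f"{header}.{payload}.{signature}"


def verify_hs256_token(token, key, now=None):
    """Return the claims if the signature is valid and 'exp' is in the future, else None."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError:
        return None  # wrong number of segments, bad Base64, or bad JSON
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return None
    if header.get("alg") != "HS256":
        return None  # never let the token choose an unexpected algorithm
    signing_input = f"{header_b64}.{payload_b64}".encode("ascii")
    expected = hmac.new(key, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, signature):
        return None
    now = time.time() if now is None else now
    expiry = claims.get("exp")
    if not isinstance(expiry, (int, float)) or expiry <= now:
        return None  # missing or expired
    return claims
```

The receiver would take the token from the `Authorization: Bearer ...` header of the webhook request and process the event only when verification returns claims.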

3. Mutual TLS (mTLS) Authentication

mTLS provides strong, bi-directional authentication at the network level. During the TLS handshake, both the client (sender) and server (receiver) must present valid X.509 certificates issued by a trusted Certificate Authority.

- Pros: This offers the highest level of security and guarantees the identity of both parties before any application data is sent.

- Cons: It is complex to configure, deploy, and maintain, especially concerning certificate issuance, renewal, and revocation across multiple endpoints. It is generally considered overkill for most general webhook use cases.
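On the receiving side, the essential mTLS setting is that the server demands and verifies a client certificate during the handshake. A minimal sketch with Python’s standard `ssl` module follows; the file paths are parameters supplied by the caller (no real paths are assumed), and both function names are hypothetical.

```python
import ssl


def require_client_certificates(context):
    """Make the TLS handshake fail unless the client presents a trusted certificate."""
    context.verify_mode = ssl.CERT_REQUIRED
    return context


def build_mtls_server_context(server_cert, server_key, client_ca_bundle):
    """Server-side context: our own certificate plus the CA that signs client certificates."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.load_cert_chain(certfile=server_cert, keyfile=server_key)
    context.load_verify_locations(cafile=client_ca_bundle)
    return require_client_certificates(context)
```

The resulting context would be passed to whatever server wraps its listening socket with TLS; certificate issuance, renewal, and revocation remain separate operational tasks.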

4. Simpler Authentication Headers (Bearer Tokens, Basic Auth, API Keys)

A less secure but simpler alternative is using a static secret key, Bearer token, or Basic Authentication credentials sent in the Authorization header.

- Pros: Very simple to implement on both the sender and receiver sides. Can often be handled by API gateways or firewalls.

- Cons: The secret itself is transmitted with every request (even over HTTPS), which makes it more vulnerable if intercepted or logged incorrectly. This method also does not ensure message integrity; a third party could capture the token and send a valid-looking but malicious payload.

5. IP Whitelisting

This is a network-level control where the receiving server only accepts traffic from a predefined list of IP addresses provided by the webhook sender.

- Pros: Easy to implement at the firewall or infrastructure level and effective against traffic from completely unknown sources.

- Cons: Inflexible if the sending service changes its IPs frequently (e.g., cloud environments). It can also still be vulnerable if an attacker manages to compromise a whitelisted IP address. It’s best used as a complementary security layer, not the primary method of authentication.

mTLS (Mutual Transport Layer Security) authentication is beneficial for webhooks in scenarios that require a high degree of trust, strong regulatory compliance, and control over both the sending and receiving environments.

While HMAC signatures are sufficient for most general-purpose webhooks (like e-commerce payments), mTLS provides an added layer of security that guarantees the identity of both parties before any data is exchanged.

Key Scenarios Where mTLS is Beneficial

- Highly Regulated Industries (Finance, Healthcare, etc.): In sectors like Open Banking or healthcare systems, stringent compliance requirements often mandate the strongest possible authentication and non-repudiation (guaranteeing that the sender’s identity cannot be forged).

- Business-to-Business (B2B) Integrations: For direct, dedicated communication channels between known business partners where both sides need absolute assurance of the other’s identity, mTLS is ideal.

- Internal Microservice Communication (Zero Trust Environments): Within a corporate network or service mesh built on a “Zero Trust” security model (where no internal service is trusted by default), mTLS is used extensively to verify every single service-to-service connection.

- Securing Critical Infrastructure or IoT Devices: In Internet of Things (IoT) applications or operational technology (OT) systems (e.g., energy grids, industrial automation), mTLS ensures that only authorized devices can communicate with central services.

- Need for the Highest Level of Confidence: When the data being transferred is exceptionally sensitive or consequential (e.g., triggering a multi-million dollar transaction or affecting life-critical systems), the added assurance of certificate-based, bidirectional authentication outweighs the operational complexity.

- IP Address Flexibility: If the sending service operates in a cloud environment where its source IP addresses are dynamic and change often, mTLS provides a robust authentication mechanism that doesn’t rely on IP whitelisting.

Summary of mTLS Benefits in these contexts

| Benefit | Description |
|---|---|
| Mutual Authentication | Unlike standard TLS (which only verifies the server), mTLS verifies both the client’s and the server’s identity using certificates. |
| Strongest Assurance | Provides non-repudiation; an attacker cannot simply steal a shared secret key (as in HMAC) to forge a message. |
| Network-Level Control | The authentication happens at the initial TLS handshake level, potentially blocking unauthorized connections before they even reach the application layer. |
| Alignment with Zero Trust | Fits naturally into modern security architectures that require strict verification of every interaction. |

The primary drawback of using mTLS for webhook authentication is the significant operational overhead and complexity it introduces compared to simpler methods like HMAC signatures.

These overheads manifest in several key areas:

1. Certificate Lifecycle Management

The biggest challenge of mTLS is managing the full Public Key Infrastructure (PKI) lifecycle. This includes:

- Issuance and Distribution: Both the webhook provider and consumer must generate, issue, and securely distribute client-side certificates. This process is often manual and complex to automate reliably.

- Renewal/Rotation: Certificates have expiration dates (often short-lived, e.g., 90 days). The renewal process must be flawlessly executed on both the sending and receiving systems before expiration; a single failure results in an immediate communication outage.

- Revocation: Mechanisms for revoking compromised certificates (using Certificate Revocation Lists or OCSP) add another layer of complexity and potential latency to the verification process.

2. Configuration Complexity

Setting up mTLS requires expertise in cryptography and PKI.

- Infrastructure Configuration: Configuring web servers, API gateways, load balancers, and firewalls to correctly handle the two-way handshake and certificate validation can be daunting and error-prone.

- Compatibility Issues: Not all services, platforms, or legacy tech stacks support mTLS out of the box, requiring custom development or workarounds.

- Environment Setup: Setting up local development and testing environments to correctly emulate mTLS can be difficult, which slows down development cycles.

3. Troubleshooting Difficulties

When communication fails, mTLS makes the troubleshooting process much more difficult than a failed signature check.

- Obscure Errors: Handshake failures due to expired, invalid, or misconfigured certificates often result in generic connection timeouts or TLS errors, making it hard to pinpoint the exact cause quickly.

- Requires Cross-Team Coordination: Troubleshooting typically involves coordination between multiple teams (security, networking, development) across potentially two different organizations (the sender and the receiver), increasing resolution time.

4. Scalability Challenges

As an infrastructure grows, managing certificates for numerous independent integrations becomes a significant burden.

- Per-Client Certificates: For security, you ideally need a unique client certificate for each consumer to limit the blast radius if one is compromised. Managing hundreds or thousands of unique certificates is a massive operational task.

- Automated Systems: While tools like service meshes (in microservice environments) can automate mTLS, they introduce their own operational overhead and steep learning curves.

5. Performance Impact

The additional cryptographic operations (extra authentication and validation steps during the handshake) add a small amount of latency and increase CPU usage compared to simple signature verification.

Due to these significant operational overheads, mTLS is generally considered overkill for most general webhook use cases. HMAC signatures are usually preferred as a simpler, broadly compatible, and scalable solution for authentication and integrity checking.
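To make the comparison concrete, here is a minimal sketch of HMAC-based webhook verification using only Python's standard library. The secret, payload, and function names are illustrative assumptions, not any particular provider's API; real providers document their own header names and signing schemes.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, body: bytes) -> str:
    """Return the hex HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify_signature(secret: bytes, body: bytes, received_signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = sign_payload(secret, body)
    return hmac.compare_digest(expected, received_signature)


# Hypothetical shared secret and payload, for illustration only.
secret = b"example-shared-secret"
body = b'{"event": "payment.completed", "amount": 100}'
signature = sign_payload(secret, body)

print(verify_signature(secret, body, signature))         # True
print(verify_signature(secret, body + b" ", signature))  # False
```

The whole check is a few lines with no certificates to issue or rotate, which is why it scales so easily across many integrations.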

The benefits of mTLS outweigh its operational overhead for webhooks specifically when the potential risk of a security breach or a forged request is significantly higher than the cost and complexity of maintaining a Public Key Infrastructure (PKI).

This trade-off is often justified in scenarios where the security failure could lead to catastrophic financial loss, severe regulatory penalties, or critical infrastructure failure.

Here are the specific situations where mTLS benefits become decisive:

1. Handling High-Value Financial Transactions or PII

If the webhooks are part of a system that transfers critical data, the highest level of assurance is required.

- Financial Services (Open Banking, Payment Processors): Regulators may mandate the use of mTLS (or equivalent high-assurance methods) to guarantee the identity of every entity involved in a transaction stream. A forged or intercepted webhook could trigger large money transfers or expose massive amounts of PII.

- Healthcare Systems: Webhooks dealing with electronic health records (EHR) must comply with regulations like HIPAA, where the legal penalties for a data breach are substantial.

2. Operating in Zero-Trust Architectures

In modern security environments that assume a perimeter breach is inevitable, internal networks are designed around a “zero-trust” model.

- Service-to-Service Communication: When microservices communicate via webhooks internally, mTLS is often the standard method to verify every connection. The overhead is mitigated by using service mesh technologies (like Istio or Linkerd) that automate certificate management.

- Internal Systems: The risk of an internal bad actor or an advanced persistent threat (APT) moving laterally through the network makes the strong, mutual verification of mTLS necessary.

3. Requiring Non-Repudiation

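Non-repudiation is the inability of the sender to deny that they sent a specific message. HMAC cannot provide it, because both parties hold the same secret and either one could have produced a given signature. mTLS comes closer, since only the sender holds the private key behind its certificate, although it authenticates the connection rather than each individual message; strict message-level non-repudiation additionally requires a digital signature over the payload.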

- Legal or Auditing Requirements: If an audit trail must legally prove that a specific request came only from a certified source, the certificate-based identification provided by mTLS is superior to the shared-secret model of HMAC.

4. Environments where IP Addresses are Unreliable for Verification

If a provider cannot offer a stable list of static IP addresses for whitelisting (common with serverless or cloud functions), mTLS provides a robust alternative authentication mechanism that relies on identity rather than network location.

In summary, you should accept the operational overhead of mTLS when a security compromise is considered an existential threat to the business or customer trust, and where regulatory compliance demands the strongest available authentication method. For the majority of consumer-facing web services, HMAC remains the preferred and more practical choice.

A service mesh is a dedicated infrastructure layer that handles service-to-service communication within a microservice architecture. It manages the complex challenges of networking, security, and observability between the numerous services that make up a modern application.

It simplifies mTLS by automating the entire Public Key Infrastructure (PKI) lifecycle and handling the configuration transparently for the application developers.

Key Components of a Service Mesh

A service mesh is generally composed of two planes:

- Data Plane: This is made up of “sidecar proxies” (like Envoy) that run alongside each service instance. All incoming and outgoing network traffic flows through these proxies. The proxies are responsible for enforcing security policies, routing traffic, and collecting telemetry data.

- Control Plane: This layer manages and configures all the proxies in the data plane. It distributes security policies, aggregates monitoring data, and importantly, manages certificates.

How a Service Mesh Simplifies mTLS

Without a service mesh, a developer or operator would have to manually manage certificate generation, distribution, and rotation for every single application. A service mesh automates this complex, error-prone process:

- Automated Certificate Provisioning: The control plane includes a Certificate Authority (CA) that automatically generates unique, short-lived (e.g., 90-day, 24-hour, or even shorter) mTLS certificates for every service proxy instance.

- Automatic Certificate Rotation: The service mesh handles the entire renewal process silently in the background, without any application downtime. It proactively rotates certificates before they expire, eliminating the biggest operational challenge of manual PKI management.

- Transparent Authentication: The sidecar proxies handle the mTLS handshake process automatically. The application code itself doesn’t need to know anything about certificates, key management, or the mTLS protocol; it just makes standard, unencrypted network calls to its local sidecar proxy, which then encrypts and authenticates the traffic to the destination service’s proxy.

- Centralized Policy Management: Security rules (e.g., “Service A can talk to Service B, but not Service C”) are defined centrally in the control plane and pushed out to all relevant proxies.

- Simplified Troubleshooting: The service mesh provides centralized dashboards and logging that specifically monitor the health of mTLS connections, making it easier to identify and debug authentication failures than traditional methods.

In essence, a service mesh removes the operational overhead of mTLS by abstracting the entire security layer away from the application logic and operations teams, making robust, mutual authentication a default setting rather than a complex engineering project.

A service mesh’s sidecar proxy handles the mTLS handshake without requiring application code changes by acting as an interceptor and terminator for all network traffic. The entire process is abstracted from the application logic, making it transparent to the developer.

Here is a step-by-step breakdown of how this process works:

1. Interception and Redirection (Transparent Proxying)

When a microservice application attempts to make an outbound network call (e.g., Service A calling Service B), the operating system’s networking rules (often using iptables in Linux environments) are configured to automatically redirect that traffic away from its intended destination and into the local sidecar proxy (Proxy A).

The application code continues to use simple, standard network calls (e.g., http://service-b:8080), completely unaware that its traffic is being diverted.

2. Initiation of the mTLS Handshake (Sender Side)

- Proxy A intercepts the request.

- The proxy reaches out to the destination service’s proxy (Proxy B).

- During the connection setup, Proxy A initiates the mTLS handshake using the short-lived certificate and private key that the service mesh control plane automatically provisioned for it. It presents its client certificate to Proxy B.

3. Mutual Verification (Receiver Side)

- Proxy B receives the request and examines the client certificate provided by Proxy A.

- Proxy B performs two critical checks:

- It verifies that the certificate is valid, not expired, and issued by a trusted Certificate Authority (CA) managed by the service mesh control plane.

- It also checks its configuration policies (pushed by the control plane) to ensure that “Service A” is authorized to communicate with “Service B”.

- Simultaneously, Proxy B presents its own server certificate to Proxy A, completing the “mutual” part of the handshake.

4. Encryption and Secure Tunnel Establishment

Once both proxies have successfully verified each other’s identities and confirmed authorization, a secure, encrypted tunnel is established between them.

5. Forwarding the Application Request

Only after the secure tunnel is established does Proxy A send the original, raw application-level HTTP request (which was unencrypted when the application originally sent it to the proxy) into the encrypted tunnel.

6. Decryption and Delivery (Receiver Side)

- Proxy B receives the encrypted data, decrypts it, and forwards the now-plaintext request to the local Service B application instance.

- Service B processes the request as a standard, simple HTTP call, completely unaware that mTLS authentication and encryption occurred between the proxies.

By handling the entire cryptographic and authentication process at the infrastructure layer, the service mesh ensures security is applied by default without burdening the application developers with the complexity of certificate management or protocol implementation.
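The receiver-side checks in step 3 can be approximated in application code with Python's standard ssl module. This is a sketch under stated assumptions, not how Envoy or any mesh implements it: the function names, file-path parameters, and the in-memory allow-list are hypothetical, and a real proxy would take the caller identity from the verified certificate.

```python
import ssl


def make_mtls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Build a server-side TLS context that demands a client certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the peer presents a
    # certificate that chains to a trusted CA: the "mutual" half of mTLS.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own certificate, presented to the client.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # The CA bundle used to verify client certificates.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


def is_authorized(caller_identity: str, allowed_callers: set) -> bool:
    """Second check: is this verified identity allowed to call the service?"""
    return caller_identity in allowed_callers


# No certificate files are loaded here, so this only demonstrates the settings.
ctx = make_mtls_server_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)

# Hypothetical policy: only service-a may call this service.
print(is_authorized("service-a", {"service-a"}))
print(is_authorized("service-c", {"service-a"}))
```

Keeping authentication (the certificate check) separate from authorization (the policy lookup) mirrors the two checks Proxy B performs.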

The service mesh control plane acts as a central Certificate Authority (CA) to distribute certificates to the sidecar proxies through an automated, secure process that requires no manual intervention. This system is designed for massive scale and frequent certificate rotation.

The distribution process typically relies on an API or agent running alongside the proxy, such as the Secret Discovery Service (SDS) API in the context of Envoy proxies used by Istio and others.

Here is an elaboration of the typical flow:

1. Workload Identity and Bootstrapping

- When a new application instance (e.g., a Kubernetes pod with its sidecar proxy) starts up, the service mesh agent (a small process running alongside the main application container and the proxy container) uses the underlying platform’s identity mechanism (like a Kubernetes Service Account token) to authenticate itself with the control plane.

- It sends its credentials to the control plane’s CA service.

2. Certificate Signing Request (CSR)

- The agent locally generates a private key for the workload. This private key is kept securely on the local machine and is never sent over the network.

- The agent then creates a Certificate Signing Request (CSR) containing the public key and identity information for the service. It sends this CSR to the control plane’s CA.

3. Verification and Signing

- The control plane’s CA verifies the credentials and the identity presented in the CSR.

- If authorized, the CA signs the CSR with its CA private key (typically that of an intermediate CA), generating a unique, short-lived X.509 workload certificate (e.g., valid for only 24 hours).

- The control plane also distributes the root of trust (the public CA certificate bundle) to all proxies in the mesh, allowing them to verify certificates issued by that CA.

4. Secure Distribution via SDS API

- The control plane sends the newly signed workload certificate back to the agent.

- The agent then delivers both the private key (stored locally) and the new signed certificate to the Envoy proxy via a secure local channel, typically a Unix Domain Socket (UDS), using the SDS API. This keeps the key material secure and ensures it never leaves the host.

5. Automated Rotation

- The agent continuously monitors the expiration of the workload certificate.

- Well before the certificate expires (e.g., halfway through its validity period), the agent automatically initiates a new CSR process and obtains a fresh certificate using the same process.

- The proxy dynamically updates its certificates and private keys without requiring any service downtime or pod restarts.

This automated system means developers don’t have to worry about the complexities of PKI management, private key protection, or certificate expiration outages.
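The rotation trigger in step 5 comes down to simple date arithmetic. The sketch below assumes the "halfway through the validity period" rule mentioned above; the function names and the `fraction` parameter are illustrative, and real agents may use a different threshold or add jitter.

```python
from datetime import datetime, timedelta, timezone


def renewal_time(not_before, not_after, fraction=0.5):
    """Return the moment at which a new CSR should be started."""
    return not_before + (not_after - not_before) * fraction


def rotation_due(not_before, not_after, now, fraction=0.5):
    """True once `now` has passed the renewal point of the certificate."""
    return now >= renewal_time(not_before, not_after, fraction)


# A hypothetical 24-hour workload certificate.
issued = datetime(2024, 1, 1, 0, 0, tzinfo=timezone.utc)
expires = issued + timedelta(hours=24)

print(renewal_time(issued, expires))                                # 2024-01-01 12:00:00+00:00
print(rotation_due(issued, expires, issued + timedelta(hours=11)))  # False
print(rotation_due(issued, expires, issued + timedelta(hours=13)))  # True
```

Renewing at the midpoint leaves half the certificate lifetime as a buffer if the control plane is briefly unreachable.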

The Secret Discovery Service (SDS) API securely delivers certificates and private keys to Envoy proxies primarily through a combination of a secure, local communication channel (a Unix Domain Socket) and a robust update mechanism.

The SDS API is part of the broader xDS suite of APIs that allow the control plane to dynamically configure the data plane proxies.

1. The Use of Unix Domain Sockets (UDS)

The most critical security mechanism in the SDS delivery process is the use of a Unix Domain Socket (UDS) for communication between the local agent and the Envoy proxy.

- Local Communication Only: UDS communication bypasses the standard TCP/IP network stack entirely. This means the sensitive private keys and certificates never touch the external network. The data moves through kernel buffers on the host machine; the filesystem path only serves as the address at which the two processes find each other.

- Isolation: The UDS path typically has strict file system permissions, ensuring that only processes with the necessary privileges (the local agent and the Envoy proxy) can access the socket.

- No Network Eavesdropping: By using UDS, the risk of network-based attacks like man-in-the-middle (MitM) attacks or network sniffing during the secret delivery process is completely eliminated.

2. The Role of the Local Agent

The local agent (which could be part of the service mesh functionality, like the Istio Agent or SPIRE Agent) acts as the secure intermediary:

- It authenticates with the remote control plane CA over a secured (mTLS) connection to request and receive the signed certificate.

- It generates the private key locally and keeps it on the host machine.

- It then exposes a local SDS server via the UDS endpoint for the Envoy proxy to connect to.

3. Dynamic and Asynchronous Updates

Envoy uses the SDS API to dynamically fetch and update secrets (certificates and keys) without requiring a restart or downtime.

- Pull or Push Mechanism: Envoy can either initiate a request (pull) for a specific secret name via the SDS API or the SDS server (local agent) can push updates to the proxy in a streaming fashion.

- Immediate Application: Once a new certificate and key pair is received, Envoy can immediately begin using them for new connections, making certificate rotation seamless and frequent.

- Security Posture: This dynamic updating capability allows for the use of very short-lived certificates, which is a strong security practice. Short-lived certificates minimize the window of opportunity for an attacker to use a compromised certificate.

In summary, the SDS API’s primary security benefit is the use of a local, out-of-band UDS channel for the final delivery of the highly sensitive key material, combined with automated, frequent rotation that limits exposure.
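The local-delivery idea can be illustrated with plain Python sockets. This is explicitly not the SDS protocol (which is a gRPC-based xDS API); it is only a sketch of handing secret material to another local process over a permission-restricted Unix Domain Socket. The socket name, helper functions, and placeholder certificate text are all assumptions made for the example, and it requires a Unix-like host.

```python
import os
import socket
import tempfile
import threading


def serve_secret_once(listener: socket.socket, secret: bytes) -> None:
    """Accept one local connection and send the secret material."""
    conn, _ = listener.accept()
    with conn:
        conn.sendall(secret)


def fetch_secret(path: str) -> bytes:
    """Connect to the local socket and read until the sender closes."""
    chunks = []
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(path)
        while True:
            chunk = client.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)


# mkdtemp creates a directory accessible only by the current user (mode 0700).
sock_dir = tempfile.mkdtemp()
sock_path = os.path.join(sock_dir, "sds.sock")  # hypothetical socket name

listener = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
listener.bind(sock_path)
os.chmod(sock_path, 0o600)  # owner-only access to the socket file
listener.listen(1)

# Placeholder text standing in for a real certificate.
placeholder = b"-----BEGIN CERTIFICATE-----\n(placeholder)\n-----END CERTIFICATE-----\n"
server = threading.Thread(target=serve_secret_once, args=(listener, placeholder))
server.start()

received = fetch_secret(sock_path)
server.join()
listener.close()
os.unlink(sock_path)
os.rmdir(sock_dir)

print(received == placeholder)
```

Nothing in this exchange is addressable from another host, which is the property the SDS design relies on for the final hop.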

Using Unix Domain Sockets (UDS) for inter-process communication (IPC) between a service mesh agent and an Envoy proxy on the same host offers significant performance benefits in terms of latency and throughput compared to using TCP over the loopback interface (localhost).

The primary reason for this performance gain is that UDS bypasses most of the operating system’s network stack.

Key Performance Implications

| Feature | Unix Domain Sockets (UDS) | TCP (Loopback) |
|---|---|---|
| Network Stack | Bypasses much of the stack. | Uses the full TCP/IP stack. |
| Data Flow | Data is copied between socket buffers inside the kernel, with no packet framing. | Data is encapsulated into packets with headers, checksums, and routing logic, even for local delivery. |
| Latency | Significantly lower (some benchmarks show roughly 50% lower latency). | Higher due to protocol overhead. |
| Throughput | Often 30% to 50% higher throughput (queries per second). | Lower than UDS for local communication. |
| Context Switches | Incurs fewer CPU context switches. | Incurs more context switches. |

Why UDS is Faster

- Avoids Network Overhead: UDS avoids the encapsulation and decapsulation of network packets, header creation, checksum calculations, and routing decisions required by the TCP/IP stack.

- Direct Data Transfer: The kernel copies data from the sender’s buffer straight into the receiver’s socket buffer without building or parsing packets, which is much cheaper than pushing the same bytes through the TCP machinery.

- Reduced CPU Cycles: Less work means less CPU usage per transaction, which can be critical in high-throughput microservice environments where every microsecond and CPU cycle counts.
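Rather than relying on quoted percentages, the difference can be measured on your own host. The following is a rough micro-benchmark sketch, assuming a Unix-like system with a loopback interface; the helper names, round count, and message size are arbitrary choices, and Python's own overhead will mask part of the gap that lower-level benchmarks show.

```python
import socket
import threading
import time


def recv_exact(sock, size):
    """Read exactly `size` bytes or raise if the peer closes early."""
    data = b""
    while len(data) < size:
        chunk = sock.recv(size - len(data))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        data += chunk
    return data


def echo(sock, rounds, size):
    """Echo `rounds` fixed-size messages back to the sender."""
    with sock:
        for _ in range(rounds):
            sock.sendall(recv_exact(sock, size))


def time_round_trips(client, server, rounds=2000, size=64):
    """Return the seconds taken for `rounds` request/response exchanges."""
    worker = threading.Thread(target=echo, args=(server, rounds, size))
    worker.start()
    payload = b"x" * size
    start = time.perf_counter()
    with client:
        for _ in range(rounds):
            client.sendall(payload)
            recv_exact(client, size)
        elapsed = time.perf_counter() - start
    worker.join()
    return elapsed


def tcp_loopback_pair():
    """Create a connected TCP socket pair over 127.0.0.1."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    client = socket.create_connection(listener.getsockname())
    server, _ = listener.accept()
    listener.close()
    for s in (client, server):
        # Disable Nagle's algorithm so small messages are sent immediately.
        s.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return client, server


uds_seconds = time_round_trips(*socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM))
tcp_seconds = time_round_trips(*tcp_loopback_pair())
print(f"UDS: {uds_seconds:.4f}s  TCP loopback: {tcp_seconds:.4f}s")
```

Results vary by kernel, hardware, and load, so treat a single run as indicative only.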

Context of SDS

For the Secret Discovery Service (SDS) API specifically, performance is important but perhaps not the single most critical factor compared to general service-to-service data plane communication.

- Infrequent Use: SDS calls primarily happen during service startup and then only during scheduled certificate rotations (e.g., every few hours or minutes). They are not used for every single application request.

- Small Payloads: The data transferred (certificates and keys) is relatively small.

- Security First: The primary motivation for using UDS for SDS is security (ensuring private keys never touch the network stack).

While the performance benefits of UDS are substantial in benchmarks and high-load scenarios like database access (PostgreSQL, Redis show 30-50% gains), for the specific task of SDS certificate delivery, the main drivers are security and architectural best practices, with performance being an added, desirable side effect.

While Unix Domain Sockets (UDS) offer significant performance and security benefits for Inter-Process Communication (IPC) on the same host, they do come with several drawbacks that limit their use in certain scenarios.

1. Limited to Same-Host Communication

The most significant limitation of UDS is that it only works for processes running on the same machine/host.

- No Network Transparency: Unlike TCP sockets, UDS cannot be used for communication between applications running on different physical servers, virtual machines, or even different Kubernetes nodes.

- Architectural Constraints: This restricts architectural flexibility. If you need a backup service on a different node to immediately take over a communication link, you can’t use UDS for that connection; you must fall back to TCP/IP.

2. Lack of Standardization Across Operating Systems

While UDS is a standard part of POSIX-compliant systems (Linux, macOS, BSD), there are compatibility nuances:

- Windows Support: Windows has its own named pipes system which serves a similar purpose, but UDS support was only added relatively recently (Windows 10/Server 2019 via AF_UNIX) and might not be as mature or universally supported across all Windows services and tools. Cross-platform application development needs a fallback mechanism (like TCP loopback) for Windows environments.

- Socket File Management: UDS communication relies on a socket file being present in the filesystem (e.g., /tmp/envoy.sock). This file must be properly managed:

- It needs appropriate filesystem permissions.

- It must be cleaned up if the application crashes or restarts unexpectedly, or subsequent startups might fail to bind because the address is already in use.
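One common way to handle the stale-file problem is to probe the socket before binding and remove it only if nothing is listening. The sketch below assumes that approach; `bind_unix_listener` is a hypothetical helper, not part of any library, and the probe is not free of races if two servers start at the same moment.

```python
import os
import socket
import stat
import tempfile


def bind_unix_listener(path: str) -> socket.socket:
    """Bind a listening UDS, removing a stale socket file left by a crash."""
    if os.path.exists(path):
        if not stat.S_ISSOCK(os.stat(path).st_mode):
            raise RuntimeError(f"{path} exists and is not a socket")
        probe = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        try:
            probe.connect(path)
        except ConnectionRefusedError:
            os.unlink(path)  # nobody is listening, so the file is stale
        else:
            raise RuntimeError(f"{path} is in use by a live process")
        finally:
            probe.close()
    listener = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    listener.bind(path)
    listener.listen(1)
    return listener


sock_dir = tempfile.mkdtemp()
path = os.path.join(sock_dir, "demo.sock")

# Simulate a crash: the socket is closed but its file is never unlinked.
crashed = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
crashed.bind(path)
crashed.close()
stale_exists = os.path.exists(path)

listener = bind_unix_listener(path)
recovered = listener.getsockname() == path
print(stale_exists, recovered)

listener.close()
os.unlink(path)
os.rmdir(sock_dir)
```

Refusing to delete the file when a live process answers avoids hijacking a socket that is still in use.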

3. Debugging and Tooling Limitations

Standard networking tools are heavily geared towards TCP/IP, which makes UDS debugging less straightforward.

- Standard Tools Don’t Work: Tools like netstat, wireshark, or tcpdump are designed to analyze network interfaces and IP packets; they generally cannot inspect traffic flowing over a UDS.

- Custom Monitoring: Monitoring UDS communication requires specific support within the application or custom tooling that can understand filesystem sockets, increasing the complexity of observing application behavior.

4. Security Risks (If Filesystem is Compromised)

While UDS is secure because it stays off the network, its security relies entirely on local filesystem permissions.

- Local Access is Key: If an attacker gains local root access or access to the specific user account running the services, they can access the socket file and potentially read or write data, whereas TCP/IP would still require network-level access or firewall breaches.

In summary, UDS is an excellent high-performance, secure choice for communication within a single host, but its fundamental limitation of being non-routable and the minor tooling/management drawbacks mean it cannot be a universal replacement for TCP/IP communications.

For debugging applications using Unix Domain Sockets (UDS), standard network analysis tools like tcpdump are ineffective because UDS traffic bypasses the network stack. Instead, specialized utilities that focus on system calls, file descriptors, and local socket statistics are required.

Here are the best tools for debugging UDS-based applications:

1. ss (Socket Statistics)

ss is the modern replacement for the deprecated netstat command and is excellent for getting an overview of all active sockets, including UDS.

- Usage:

- ss -x: Displays information about all Unix domain sockets.

- ss -xp: Shows UDS sockets and the associated process (PID/Program name) that opened them.

- ss -xa | grep <socket_path>: Filters for a specific socket path.

- Benefit: Quickly tells you if the socket is listening (LISTEN) or connected (ESTAB), and which process owns it, which helps diagnose basic connectivity or file permission issues.

2. lsof (List Open Files)

Since UDS are treated as files by the operating system, lsof is a powerful tool for seeing which processes have a specific socket file open.

- Usage:

- lsof -U: Lists all open Unix domain sockets.

- lsof <socket_file_path>: Shows which process is using a specific socket file (e.g., lsof /tmp/envoy.sock).

- Benefit: Helps identify if the correct process is bound to the socket, or if a previous application crash left an orphaned socket file.

3. strace (System Trace)

strace is an indispensable low-level tool that traces all system calls made by a process. This allows you to observe the exact sendmsg(), recvmsg(), bind(), listen(), and connect() calls that an application is making.

- Usage:

- strace -f -e trace=network -p <PID>: Traces network-related system calls for a running process (including UDS calls).

- strace -e trace=open,socket,connect,bind,recvmsg,sendmsg -p <PID>: Provides a more granular view of the socket lifecycle and data transfer attempts.

- Benefit: Provides detailed insights into what the application is trying to do with the socket, revealing low-level errors like “permission denied” during a connect() call that might otherwise be hidden by application-level error handling.

4. socat (Socket CAT)

socat is a versatile command-line utility that can establish bidirectional data streams between many different types of data sources and sinks, including UDS. It’s an excellent tool for manual testing or acting as a middleman.

- Usage:

- Listen on a UDS and dump traffic to stdout: socat UNIX-LISTEN:/tmp/test.sock -

- Connect to a UDS and send a string: echo "hello" | socat - UNIX-CONNECT:/tmp/test.sock

- Benefit: Allows developers to manually interact with a UDS endpoint to confirm if it’s alive, accepts connections, and processes data correctly, without needing the other application to be running.

5. unixdump (eBPF-based Sniffer)

For advanced analysis, unixdump is an eBPF-based utility that can passively capture all traffic across Unix domain sockets in Linux hosts.

- Usage:

unixdump -i <interface> -p <PID>: Captures UDS traffic system-wide or filtered by process ID.

- Benefit: It functions like tcpdump for UDS, allowing full capture and analysis of the data payload flowing between processes, which is impossible with standard network sniffers.

When debugging UDS communication using strace, you are looking at the raw interactions between the application and the Linux kernel via system calls. sendmsg() and recvmsg() are the generic system calls used for sending and receiving data over various types of sockets, including UDS.

Interpreting their output involves understanding the arguments passed to the kernel and the values returned by the kernel.

Example strace Output

First, here is a typical output format you might see:

sendmsg(3, {msg_name={sa_family=AF_UNIX, sun_path="/tmp/envoy.sock"}, msg_namelen=19, msg_iov=[{iov_base="my_secret_data", iov_len=14}], msg_iovlen=1, msg_controllen=0, msg_flags=0}, 0) = 14

And for receiving:

recvmsg(3, {msg_name={sa_family=AF_UNIX, sun_path="/tmp/envoy.sock"}, msg_namelen=128, msg_iov=[{iov_base="my_secret_data", iov_len=1024}], msg_iovlen=1, msg_controllen=0, msg_flags=0}, 0) = 14

Interpretation Breakdown

The output provides the function name, its arguments, and the return value.

1. File Descriptor (3 in the examples)

- sendmsg(3, ... / recvmsg(3, ...: This is the file descriptor (FD) that the application is using to refer to the opened socket connection.

- Debugging Tip: You can use lsof -p <PID> to confirm which FD corresponds to which socket file if you have multiple connections open.

2. The msghdr Structure ({...})

The second argument is a pointer to the msghdr structure, which describes the message being sent or received. This is where most of the relevant debugging information lies:

- msg_name={sa_family=AF_UNIX, sun_path="..."}: This indicates the destination or source address of the message. AF_UNIX confirms it’s a Unix Domain Socket communication. sun_path provides the path of the socket file. This is crucial for verifying the application is connecting to the intended socket file.

- msg_iov=[{iov_base="...", iov_len=...}]: This is the I/O vector, the actual data buffer. iov_base shows a snippet of the data being transferred (if strace is run with the right flags, like -s <bytes>). iov_len shows the length of the data buffer.

- msg_controllen, msg_flags: These typically remain zero for basic UDS communication, but non-zero values can indicate advanced features such as sending file descriptors over a socket.

3. Flags (0 in the examples)

The third argument is an optional set of flags (e.g., MSG_DONTWAIT for non-blocking I/O). A simple 0 means standard behavior.

4. The Return Value (= 14 in the examples)

The number after the equal sign is the system call’s result code.

- Positive Value (e.g., = 14): Success. This number indicates the number of bytes transferred in that single call. In the sendmsg() example, the 14 bytes sent match the iov_len value in the arguments; in the recvmsg() example, iov_len is the 1024-byte buffer capacity, and 14 is how much of it was filled.

- Negative Value (= -1): Failure. strace prints the errno name next to the -1, such as = -1 ECONNREFUSED (connection refused), = -1 EPIPE (broken pipe, likely the other side closed the connection), or = -1 EACCES (permission denied, likely a filesystem permission issue on the socket file).

By reading these outputs, you can quickly verify if the correct data is being sent to the right path and if the kernel is encountering any fundamental errors during the communication process.
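The same return values can be reproduced from a small script, which is handy when learning to read strace output. This sketch uses Python's socket.sendmsg() and socket.recvmsg() wrappers over a connected socket pair; it assumes a Linux host, and the payload simply mirrors the example string above.

```python
import errno
import socket

sender, receiver = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)

# sendmsg() returns the number of bytes accepted by the kernel.
sent = sender.sendmsg([b"my_secret_data"])

# recvmsg() takes a buffer capacity (1024) and returns the bytes received.
data, ancdata, flags, address = receiver.recvmsg(1024)
print(sent, len(data), data)

# Close the receiving end, then try to send again to provoke an error.
receiver.close()
try:
    sender.sendmsg([b"more"])
    error_name = None
except OSError as exc:
    # The exception carries the same errno that strace prints after "= -1".
    error_name = errno.errorcode[exc.errno]
print(error_name)

sender.close()
```

Running this script under strace -e trace=sendmsg,recvmsg lets you line up each call with its traced output.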

Securing Unix Domain Sockets (UDS) in a multi-tenant environment requires strict isolation measures, as all communication occurs on the same host machine. A multi-layered approach is necessary to prevent one tenant from accessing another’s communication channels.

Here are the best practices for securing UDS in multi-tenant environments:

1. Enforce Strict Filesystem Permissions

UDS security relies primarily on standard Linux Discretionary Access Control (DAC) permissions, just like regular files.

- Dedicated Directories: Place each tenant’s UDS files in a dedicated, isolated directory structure (e.g., /var/run/tenant_A/).

- Restrict Directory Access: Ensure the parent directory has minimal permissions. Only the specific user account associated with that tenant’s processes should have read/write/execute permissions on that directory.

- Minimal Socket Permissions: Set socket file permissions to the bare minimum required (e.g., owner-only read/write, chmod 0600).

- Dedicated User/Group IDs: Avoid shared user accounts. Run each tenant’s services under a unique, non-privileged user and group ID (UID/GID). This is fundamental to isolating processes in a shared host environment.
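The directory and socket permissions above can be applied at creation time. The sketch below is illustrative only: `create_tenant_socket`, the `api.sock` name, and the temporary base directory are assumptions, and in a real deployment each tenant directory would also be owned by a distinct UID, which this single-user example cannot show.

```python
import os
import socket
import stat
import tempfile


def create_tenant_socket(base_dir: str, tenant: str):
    """Create an owner-only directory and a 0600 listening socket inside it."""
    tenant_dir = os.path.join(base_dir, tenant)
    os.makedirs(tenant_dir, mode=0o700, exist_ok=True)
    os.chmod(tenant_dir, 0o700)  # enforce the mode even if the directory existed
    path = os.path.join(tenant_dir, "api.sock")  # hypothetical socket name

    listener = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    # Setting the umask before bind() means the socket file is never visible
    # with looser permissions. Note that umask is process-wide.
    old_umask = os.umask(0o177)
    try:
        listener.bind(path)
    finally:
        os.umask(old_umask)
    listener.listen(8)
    return listener, path


base_dir = tempfile.mkdtemp()
listener, path = create_tenant_socket(base_dir, "tenant_a")

dir_mode = stat.S_IMODE(os.stat(os.path.dirname(path)).st_mode)
sock_mode = stat.S_IMODE(os.stat(path).st_mode)
print(oct(dir_mode), oct(sock_mode))

listener.close()
os.unlink(path)
os.rmdir(os.path.dirname(path))
os.rmdir(base_dir)
```

Restricting the umask before binding, rather than calling chmod afterwards, closes the brief window in which another local user could connect.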

2. Utilize Mandatory Access Control (MAC)

Relying solely on DAC (standard filesystem permissions) can be risky if a process is compromised with root privileges. Mandatory Access Control (MAC), implemented via Linux Security Modules (LSMs) such as SELinux or AppArmor, provides a crucial second layer of defense.

- Policy Enforcement: MAC policies enforce strict, centralized rules that an application owner cannot override. An administrator defines exactly which processes can access which socket paths.

- Confinement: Even if a tenant’s application is fully compromised, the MAC policy can prevent the compromised process from reading or writing to socket files belonging to another tenant’s service.

- Container Integration: In containerized multi-tenant environments (like Kubernetes), these policies can be integrated at the container or pod level, providing robust isolation between tenants sharing the same physical node.

3. Leverage Container/Namespace Isolation

Modern multi-tenant platforms utilize virtualization and containerization to provide strong isolation boundaries.

- Containerization: Use container runtime environments (Docker, containerd) that provide process and filesystem isolation via namespaces and cgroups. Each tenant’s workload should run in its own isolated container.

- Ephemeral Sockets: Generate and manage UDS files within the ephemeral storage of the container instance. This ensures the socket and its related permissions are destroyed when the tenant’s workload finishes.

- Kubernetes Best Practices: In a Kubernetes cluster, use strict Network Policies to restrict cross-namespace communication and use Pod Security Standards to enforce least privilege principles for each tenant’s workload.

4. Application-Level Authentication and Authorization

Do not assume a connection to a UDS is inherently trusted. Add authentication mechanisms within your application logic.

- Peer Credentials Check: The Linux kernel offers a mechanism (the SO_PEERCRED socket option) to retrieve the UID, GID, and PID of the process connected to the UDS. Applications should verify these credentials upon connection to confirm the peer’s identity.

- Tenant ID Validation: In your application logic, associate every request with a specific tenant ID and strictly enforce that the connected user ID matches the data they are attempting to access.
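A peer-credentials check can be sketched in a few lines of Python on Linux. The helper names and the allow-list of UIDs are hypothetical; the struct layout assumes the Linux `struct ucred` (a signed pid followed by unsigned uid and gid), and a socket pair stands in for an accepted client connection.

```python
import os
import socket
import struct

UCRED_FORMAT = "iII"  # pid_t pid; uid_t uid; gid_t gid (Linux struct ucred)


def peer_credentials(conn: socket.socket):
    """Return (pid, uid, gid) of the process on the other end of a UDS."""
    raw = conn.getsockopt(
        socket.SOL_SOCKET, socket.SO_PEERCRED, struct.calcsize(UCRED_FORMAT)
    )
    return struct.unpack(UCRED_FORMAT, raw)


def is_allowed_peer(conn: socket.socket, allowed_uids: set) -> bool:
    """Authorize a connection based on the kernel-reported peer UID."""
    _pid, uid, _gid = peer_credentials(conn)
    return uid in allowed_uids


# A socket pair created by this process, so the peer is this process itself.
server_side, client_side = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)

pid, uid, gid = peer_credentials(server_side)
print(pid == os.getpid(), uid == os.geteuid())

# Hypothetical policy: only the current user's UID is allowed.
print(is_allowed_peer(server_side, {os.geteuid()}))
print(is_allowed_peer(server_side, {os.geteuid() + 1}))

server_side.close()
client_side.close()
```

Because the kernel fills in these values, the check does not depend on anything the client sends over the socket.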

By combining filesystem permissions, mandatory access controls, platform isolation, and application-level checks, you can create a secure UDS communication channel suitable for a multi-tenant environment.

The SO_PEERCRED socket option is a Linux-specific, read-only mechanism used to securely retrieve the credentials (PID, UID, and GID) of the process on the other end of a connected Unix Domain Socket (UDS). The credentials are provided by the kernel itself and cannot be spoofed by the connecting process.

The Process of Using SO_PEERCRED

The general process involves using the getsockopt() system call on the connected socket from the server side.

1. Server Listens and Accepts a Connection

The server application sets up a UDS and listens for incoming connections. When a client connects, the server accepts the connection, which yields a new, dedicated socket file descriptor for that specific client connection.

2. Call getsockopt() with SO_PEERCRED

After accepting the connection, the server application calls the getsockopt() function on the new client file descriptor.

The key parameters for this call are:

- sockfd: The file descriptor of the connected client socket.

- level: Set to SOL_SOCKET (socket level).

- optname: Set to SO_PEERCRED.

- optval: A pointer to a buffer (a struct ucred in C) where the kernel will write the credentials.

- optlen: A pointer to the size of the buffer (sizeof(struct ucred)).

3. Kernel Populates the ucred Structure

The Linux kernel looks up the peer process associated with that specific socket connection and securely populates the provided struct ucred with the credentials that were in effect at the time the connection was established:

- pid: The process ID of the peer process.

- uid: The effective user ID of the peer process.

- gid: The effective group ID of the peer process.
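Steps 2 and 3 can be tried without a separate client process. The sketch below assumes Linux and uses `socket.socketpair()`, which returns two already-connected UDS endpoints, so the "peer" is the current process itself. The helper name `peer_credentials` and the constant `UCRED_FORMAT` are hypothetical.

```python
import os
import socket
import struct

# struct ucred is { pid_t pid; uid_t uid; gid_t gid; }: one signed and two
# unsigned 32-bit integers on Linux.
UCRED_FORMAT = "iII"


def peer_credentials(sock):
    """Return (pid, uid, gid) of the peer of a connected Unix domain socket."""
    raw = sock.getsockopt(socket.SOL_SOCKET, socket.SO_PEERCRED,
                          struct.calcsize(UCRED_FORMAT))
    return struct.unpack(UCRED_FORMAT, raw)


# Both ends of the pair belong to this process, so the kernel reports
# this process's own credentials for either end.
left, right = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
try:
    pid, uid, gid = peer_credentials(left)
finally:
    left.close()
    right.close()
```

Here `pid`, `uid`, and `gid` should match `os.getpid()`, `os.geteuid()`, and `os.getegid()`, since the credentials are the ones in effect when the pair was created.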

4. Server Application Validates Credentials

The getsockopt() call returns a status code (0 on success, -1 on failure). If successful, the server application can then read the pid, uid, and gid from the populated ucred structure and use them for authorization logic:

- It can check if the UID matches the expected user for that service.

- It can verify the GID is part of an allowed group.

If the credentials do not match the expected values, the server can immediately close the connection.
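The validation step can be reduced to a small allow-list check, sketched below. The function name `is_peer_allowed` and the example IDs are hypothetical. Note that struct ucred carries a single GID, so this check sees only that one group and not the peer’s supplementary groups.

```python
def is_peer_allowed(uid, gid, allowed_uids=frozenset(), allowed_gids=frozenset()):
    """Accept a peer whose UID or reported GID appears in an allow-list."""
    return uid in allowed_uids or gid in allowed_gids


# Example policy: allow the service account (UID 1001) or anyone whose
# reported GID is the hypothetical 'operators' group (GID 50).
ALLOWED_UIDS = frozenset({1001})
ALLOWED_GIDS = frozenset({50})

service_ok = is_peer_allowed(1001, 1001, ALLOWED_UIDS, ALLOWED_GIDS)
operator_ok = is_peer_allowed(2000, 50, ALLOWED_UIDS, ALLOWED_GIDS)
stranger_ok = is_peer_allowed(2000, 2000, ALLOWED_UIDS, ALLOWED_GIDS)
```

A server would call this with the values read from the ucred structure and close the connection when it returns False.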

Example in C

```c
#define _GNU_SOURCE /* Required for the struct ucred definition */
#include <stdio.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <unistd.h>

/* 'client_fd' is assumed to be an accepted, connected UDS file descriptor. */
static int print_peer_credentials(int client_fd)
{
    struct ucred creds;
    socklen_t len = sizeof(creds);

    if (getsockopt(client_fd, SOL_SOCKET, SO_PEERCRED, &creds, &len) == -1) {
        perror("getsockopt(SO_PEERCRED) failed");
        return -1;
    }

    /* pid_t is signed; uid_t and gid_t are unsigned, so cast for printf. */
    printf("Peer credentials:\n");
    printf("  Process ID (PID): %ld\n", (long)creds.pid);
    printf("  User ID (UID): %lu\n", (unsigned long)creds.uid);
    printf("  Group ID (GID): %lu\n", (unsigned long)creds.gid);
    return 0;
}
```

This method is effective because the kernel, rather than the client, vouches for the identity of the connecting process, so the server needs no application-level protocol just to learn who the peer is.

This Python example demonstrates how a UDS server can use the SO_PEERCRED option to get the connecting client’s User ID (UID) and enforce that only a specific, expected user is authorized to interact with the socket.

This requires the socket, os, struct, and pwd modules from the Python standard library.

Server Application Code (uds_server.py)

```python
import os
import pwd
import socket
import struct
import sys

# Socket path and the user who is authorized to use it (e.g., 'app_user')
SOCKET_PATH = '/tmp/secure_app.sock'
AUTHORIZED_USER = 'app_user'

# struct ucred is (pid_t, uid_t, gid_t): one signed and two unsigned ints
UCRED_FORMAT = 'iII'


def get_uid_from_username(username):
    """Return the UID for a username via the pwd module, or None if unknown."""
    try:
        return pwd.getpwnam(username).pw_uid
    except KeyError:
        print(f"User '{username}' not found.")
        return None


EXPECTED_UID = get_uid_from_username(AUTHORIZED_USER)
if EXPECTED_UID is None:
    sys.exit(1)  # no point serving if nobody can ever be authorized
print(f"Authorized UID set to: {EXPECTED_UID}")

# Remove a stale socket file left over from a previous run
if os.path.exists(SOCKET_PATH):
    os.remove(SOCKET_PATH)

# Create the UDS socket. Setting the umask before bind() creates the socket
# file as 0o600 from the start, so there is no window with looser permissions.
server = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
old_umask = os.umask(0o177)
try:
    server.bind(SOCKET_PATH)
finally:
    os.umask(old_umask)
server.listen(1)
print("Server listening...")

while True:
    conn, _ = server.accept()
    print(f"Connection established (FD: {conn.fileno()})")

    # --- Authorization step using SO_PEERCRED ---
    with conn:  # closes the connection on every path
        try:
            # Ask the kernel for the peer's struct ucred and unpack it
            creds = conn.getsockopt(socket.SOL_SOCKET, socket.SO_PEERCRED,
                                    struct.calcsize(UCRED_FORMAT))
            pid, uid, gid = struct.unpack(UCRED_FORMAT, creds)
            print(f"Peer credentials received: PID={pid}, UID={uid}, GID={gid}")

            if uid != EXPECTED_UID:
                # Unauthorized: reject the connection immediately
                print(f"AUTHORIZATION FAILED: UID {uid} is not authorized.")
                conn.sendall(b"Access Denied. Unauthorized UID.\n")
                continue

            # Authorized: proceed with communication
            print("AUTHORIZATION SUCCESSFUL: Proceeding with request.")
            conn.sendall(b"Access Granted. Welcome!\n")
            data = conn.recv(1024)
            if data:
                print(f"Received: {data.decode('utf-8')}")
        except (OSError, struct.error) as e:
            print(f"Connection handling failed: {e}")
```

Client Applications (Testing Authorization)

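To test this, run the server as app_user and connect both as that user and as a different one. Because the socket file has mode 0o600, only the user running the server (and root) can connect at all; any other unprivileged user fails at connect() with a permission error before the SO_PEERCRED check runs. Connecting as root is therefore the simplest way to see the UID check itself reject a peer.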

Authorized Client (uds_client_authorized.py)

This will run as the AUTHORIZED_USER and succeed:

```python
import socket

SOCKET_PATH = '/tmp/secure_app.sock'

client = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
try:
    client.connect(SOCKET_PATH)
    print("Connected successfully.")
    print(f"Server response: {client.recv(1024).decode('utf-8')}")
    client.sendall(b"Hello from authorized user!")
except OSError as e:
    print(f"Connection failed: {e}")
finally:
    client.close()
```

Unauthorized Client (uds_client_unauthorized.py)

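Run this as a different user. As root, the connection succeeds and the server then rejects it after the SO_PEERCRED check; as another unprivileged account, connect() itself fails with a permission error because of the 0o600 socket mode: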

```python
import socket

SOCKET_PATH = '/tmp/secure_app.sock'

client = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
try:
    client.connect(SOCKET_PATH)
    print("Connected successfully.")
    print(f"Server response: {client.recv(1024).decode('utf-8')}")
except OSError as e:
    # Raised if connect() is denied by the socket file permissions or the
    # server resets the connection after rejecting the peer
    print(f"Connection failed or closed by server: {e}")
finally:
    client.close()
```